Vision-Language Model in Deep RL

Improving Explainability in Deep Reinforcement Learning

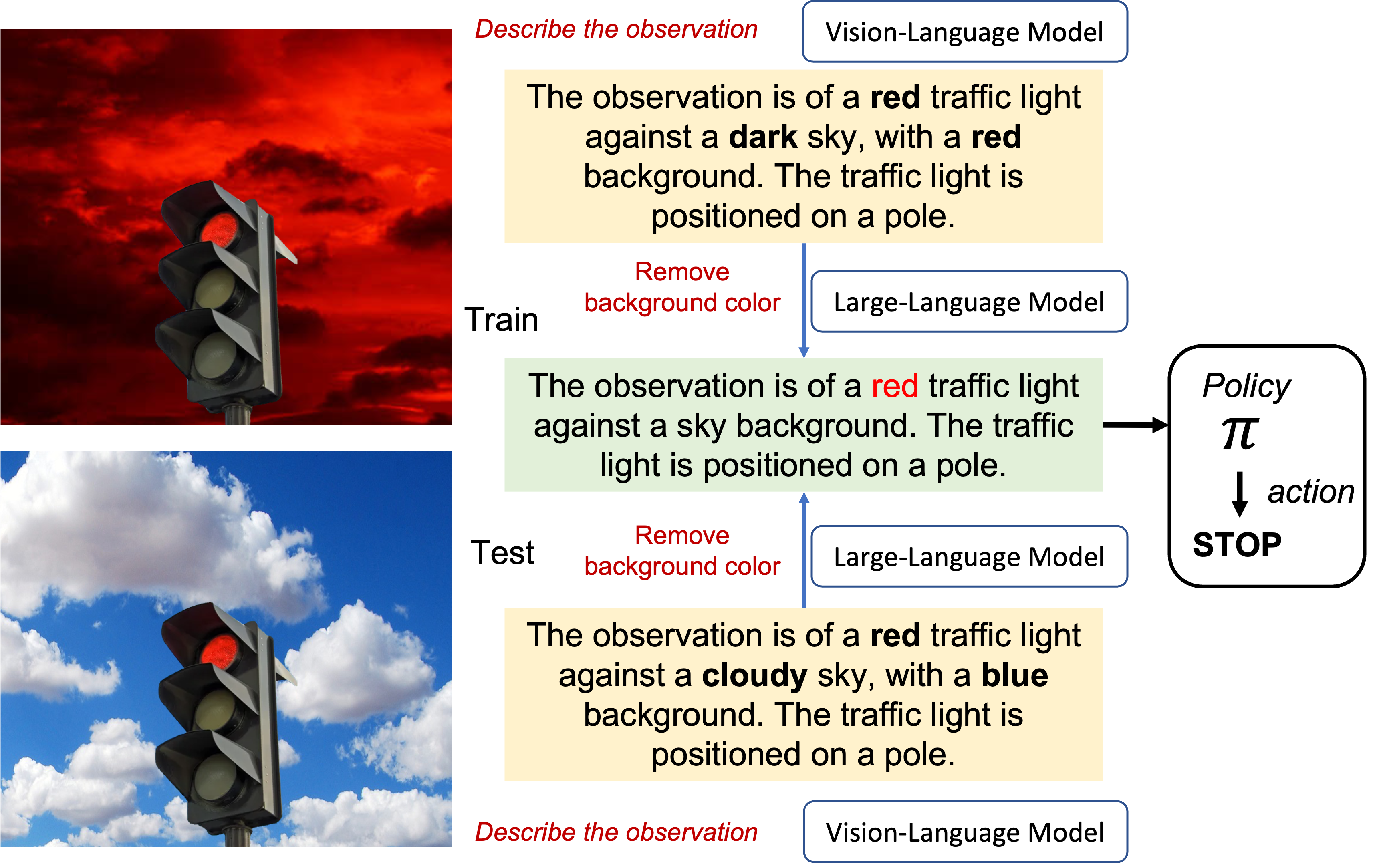

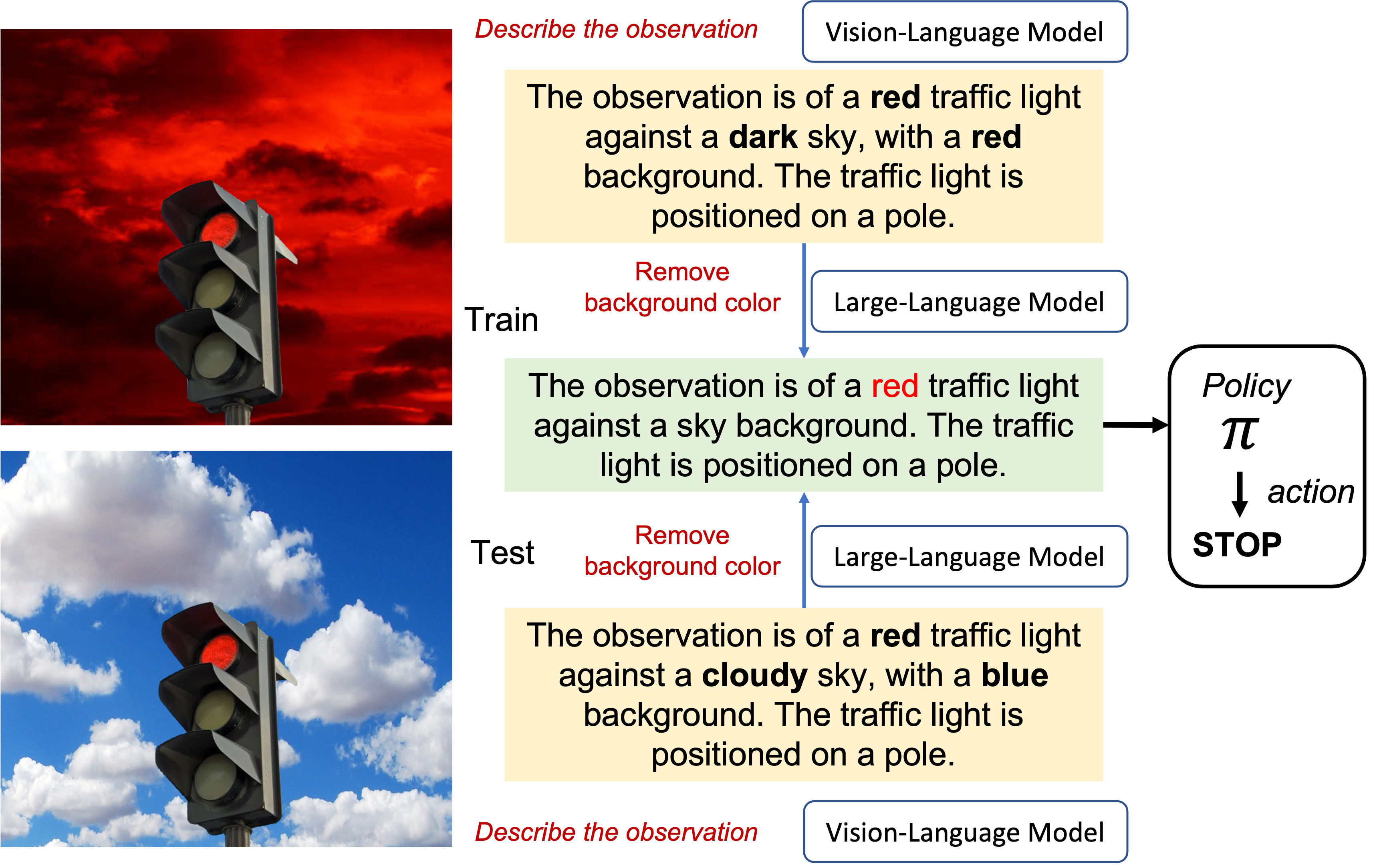

I focus on decision-making derived from language descriptions of visual input (e.g., images). The method first compresses the visual information (i.e., pixels) into natural language and then uses this language as the state information for learning a policy with reinforcement learning (Rahman & Xue, 2024). In this setup, the agent learns from a natural language description of the image rather than from the image itself. This approach has several advantages. First, unlike raw pixel data, the language representation is inherently interpretable by humans and gives a clearer indication of what the agent understands from the visual scene. Second, it makes it possible to use large language models (LLMs) to process the natural language state information. For instance, unnecessary features, such as color information, can be filtered out by directing the LLM to ignore them (e.g., prompt: "rewrite the description excluding color information").
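As a rough illustration of the idea, the sketch below turns a caption into a state vector after removing color words. It is not the implementation from Rahman & Xue (2024): the function names, the `COLOR_WORDS` list, and the hashed bag-of-words encoding are all assumptions made for this example, and the word filter is only a simple stand-in for prompting an LLM to rewrite the description.

```python
import hashlib
import re

# Hypothetical color vocabulary; a real system would instead prompt an LLM
# with something like "rewrite the description excluding color information".
COLOR_WORDS = {"red", "green", "blue", "yellow", "black", "white", "orange", "purple"}


def drop_color_words(description: str) -> str:
    """Stand-in for the LLM rewrite step: lowercase the text and remove color words."""
    tokens = re.findall(r"[a-z]+", description.lower())
    return " ".join(t for t in tokens if t not in COLOR_WORDS)


def encode_state(description: str, dim: int = 32) -> list:
    """Hash each word into a fixed-size bag-of-words vector usable as an RL state."""
    vec = [0.0] * dim
    for token in description.split():
        # A stable hash keeps the encoding identical across runs.
        index = int(hashlib.sha256(token.encode("utf-8")).hexdigest(), 16) % dim
        vec[index] += 1.0
    return vec


# Two hypothetical captions of scenes that differ only in the color of the car.
caption_a = "A red car approaches the agent on the left"
caption_b = "A blue car approaches the agent on the left"

state_a = encode_state(drop_color_words(caption_a))
state_b = encode_state(drop_color_words(caption_b))
```

Under these assumptions, both captions map to the same state vector, so a policy trained on such states cannot condition on the color of the car. The filtered text also stays readable, which is what makes the state easy to inspect.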

The results indicated that policies trained on natural language descriptions of images generalized better than those trained directly on images. Combined with the interpretability of the language-based state compared to raw pixels, this indicates a strong use case for language-based state representation.