Masudur Rahman

Postdoctoral Researcher, Purdue University | Ph.D. in Computer Science, Purdue (2024)

Email: rahman64@purdue.edu

I study how intelligent systems can adapt reliably when environments, objectives, and assumptions change—especially in high-stakes real-world settings such as healthcare and robotics.

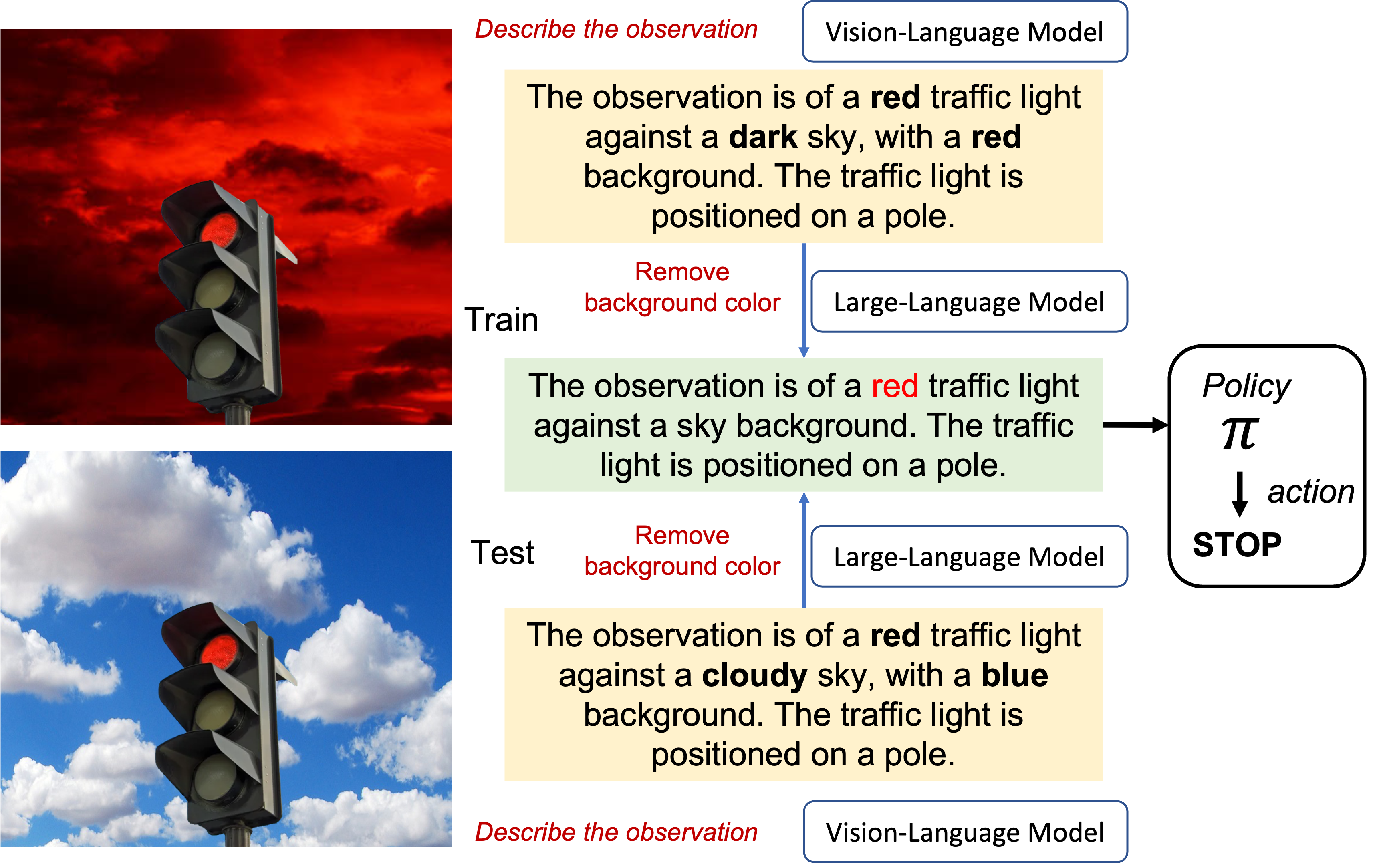

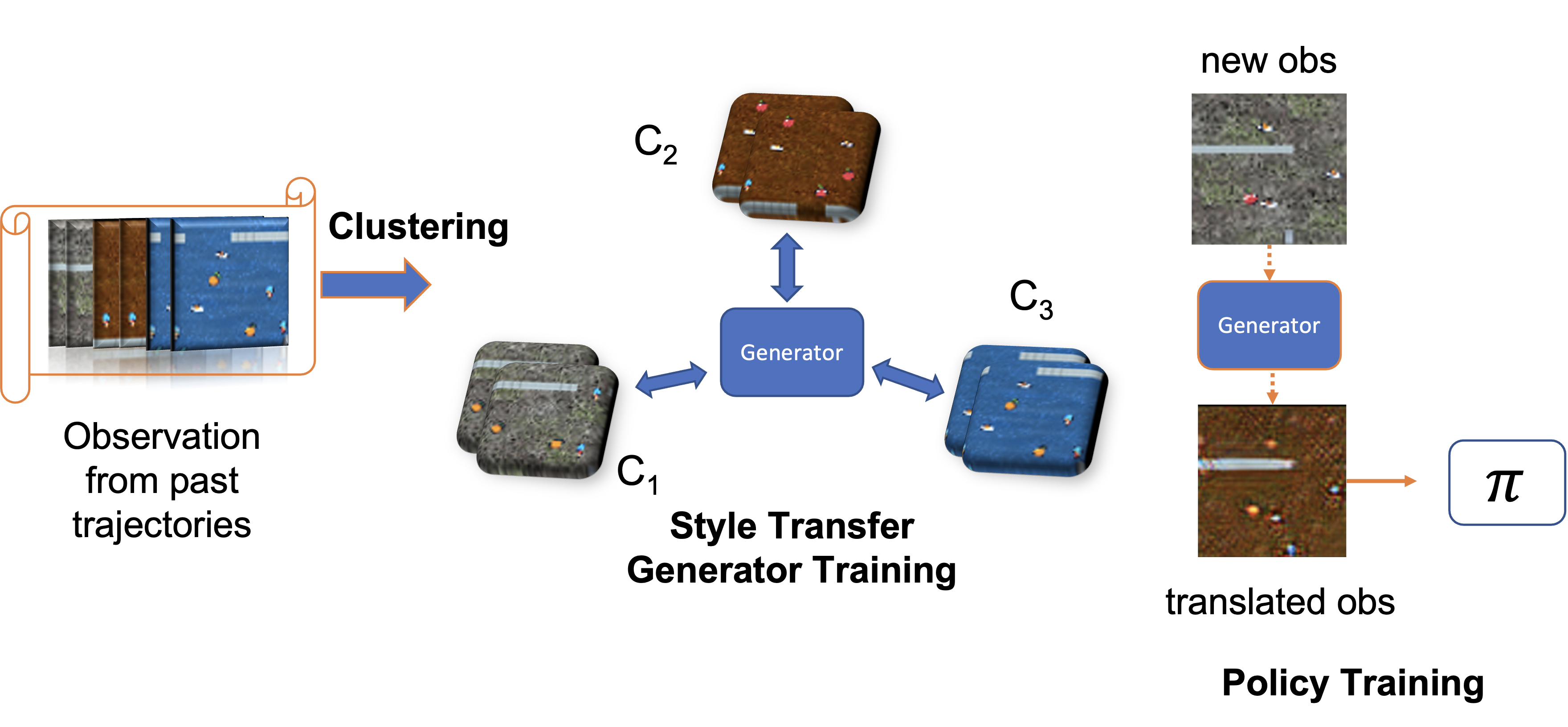

My research is motivated by a recurring failure mode of modern AI systems: strong performance under static or controlled conditions often collapses when systems are deployed in dynamic environments where data is limited, objectives are underspecified, and mistakes are costly. Rather than treating these failures as edge cases, my work treats change itself as the defining condition of intelligence.

I focus on reinforcement learning as a foundation for adaptive decision-making, and develop principled methods that integrate learning with semantic representations, structured reasoning, and embodied interaction. Across theory, algorithms, and deployed systems, my goal is to understand why adaptation fails in practice and how intelligent systems can remain meaningful and reliable when assumptions break.

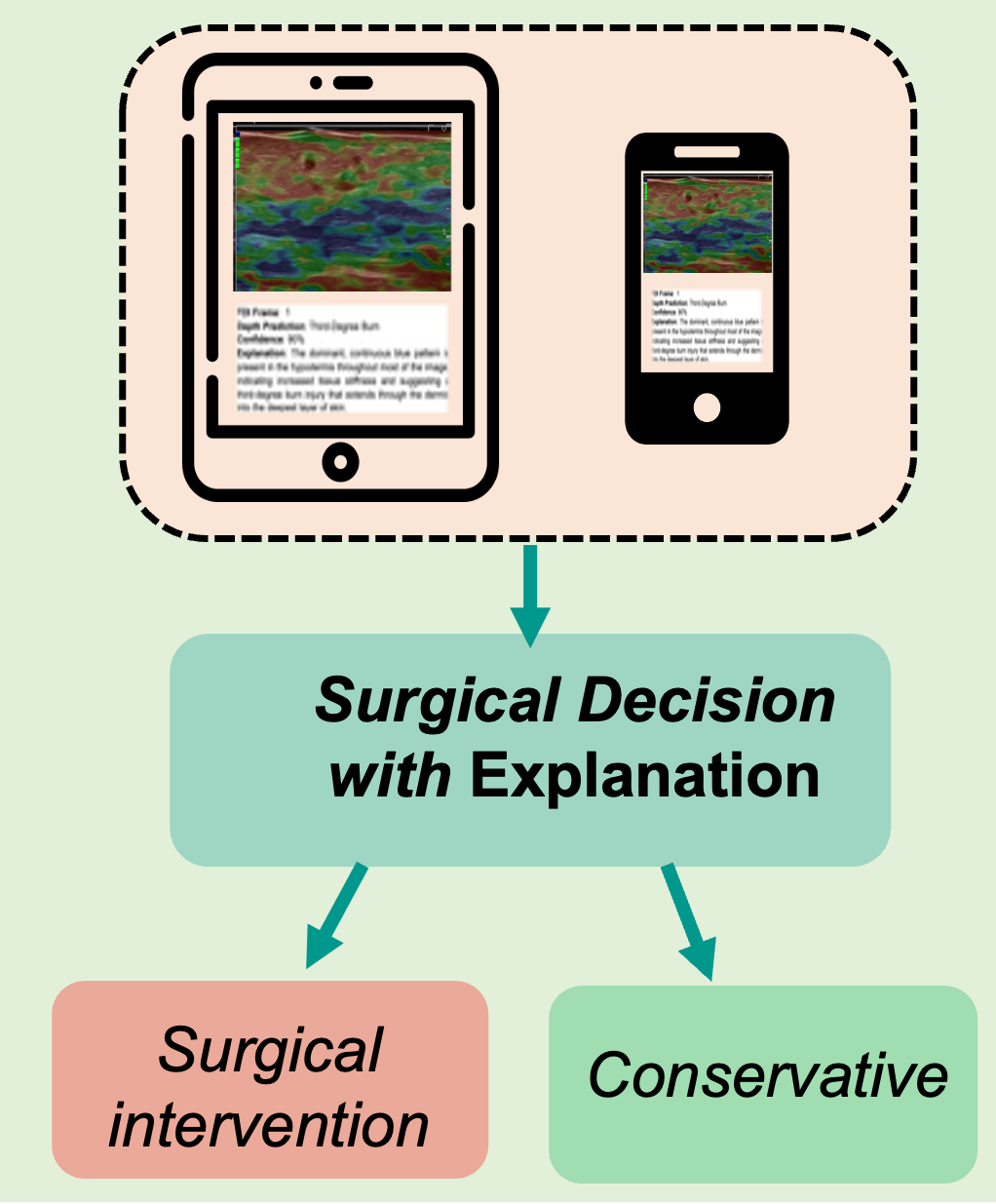

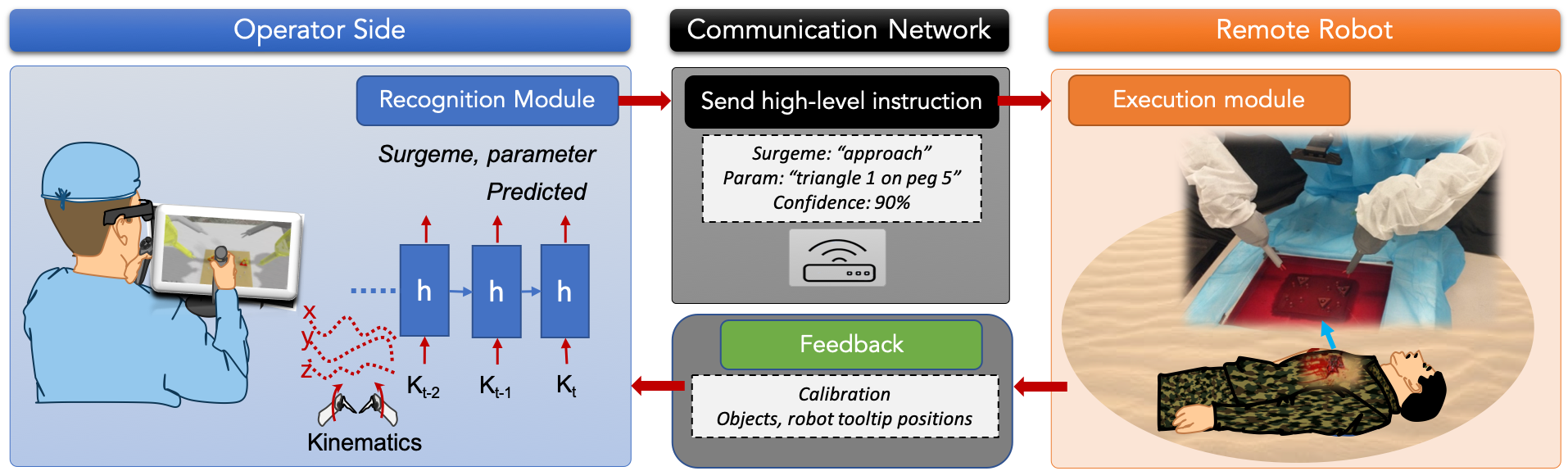

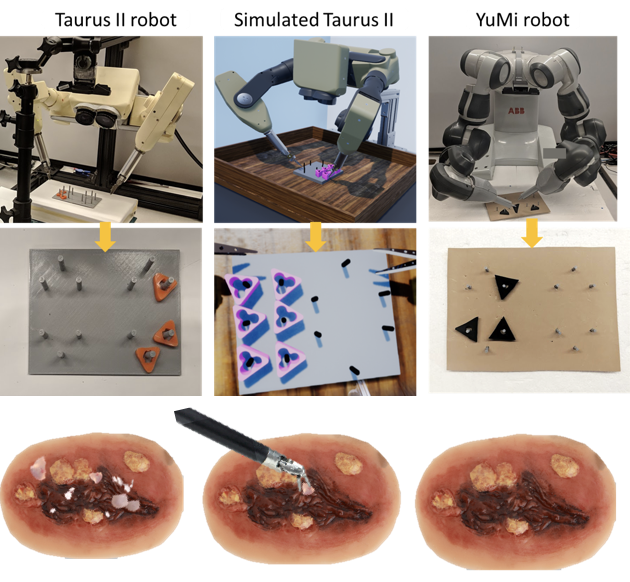

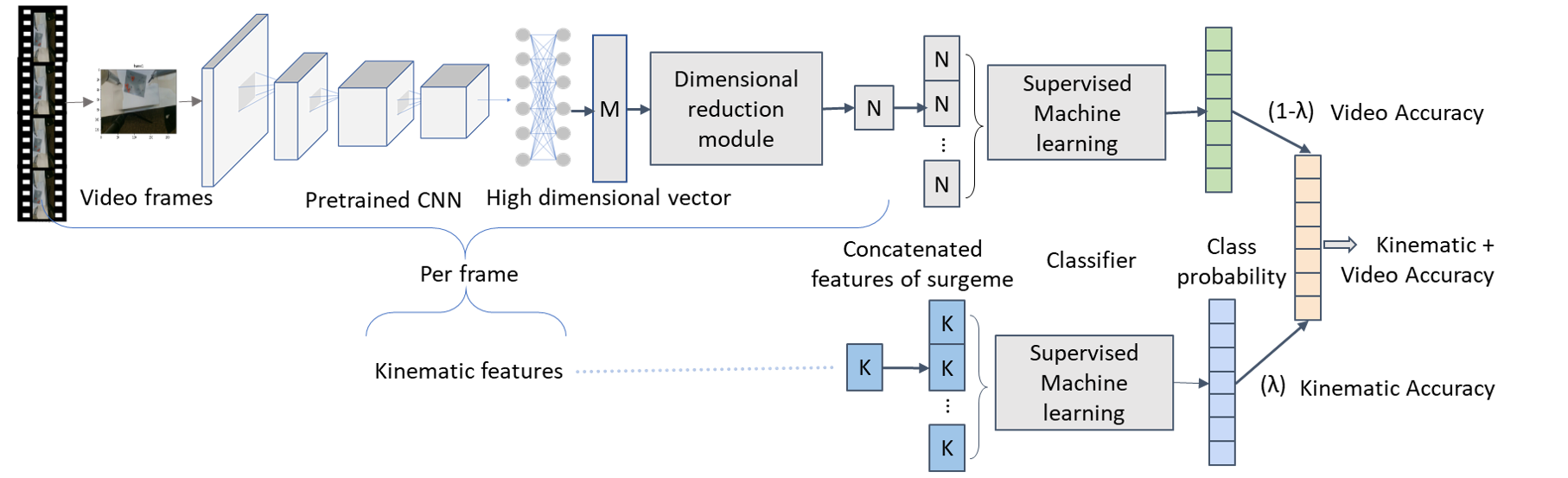

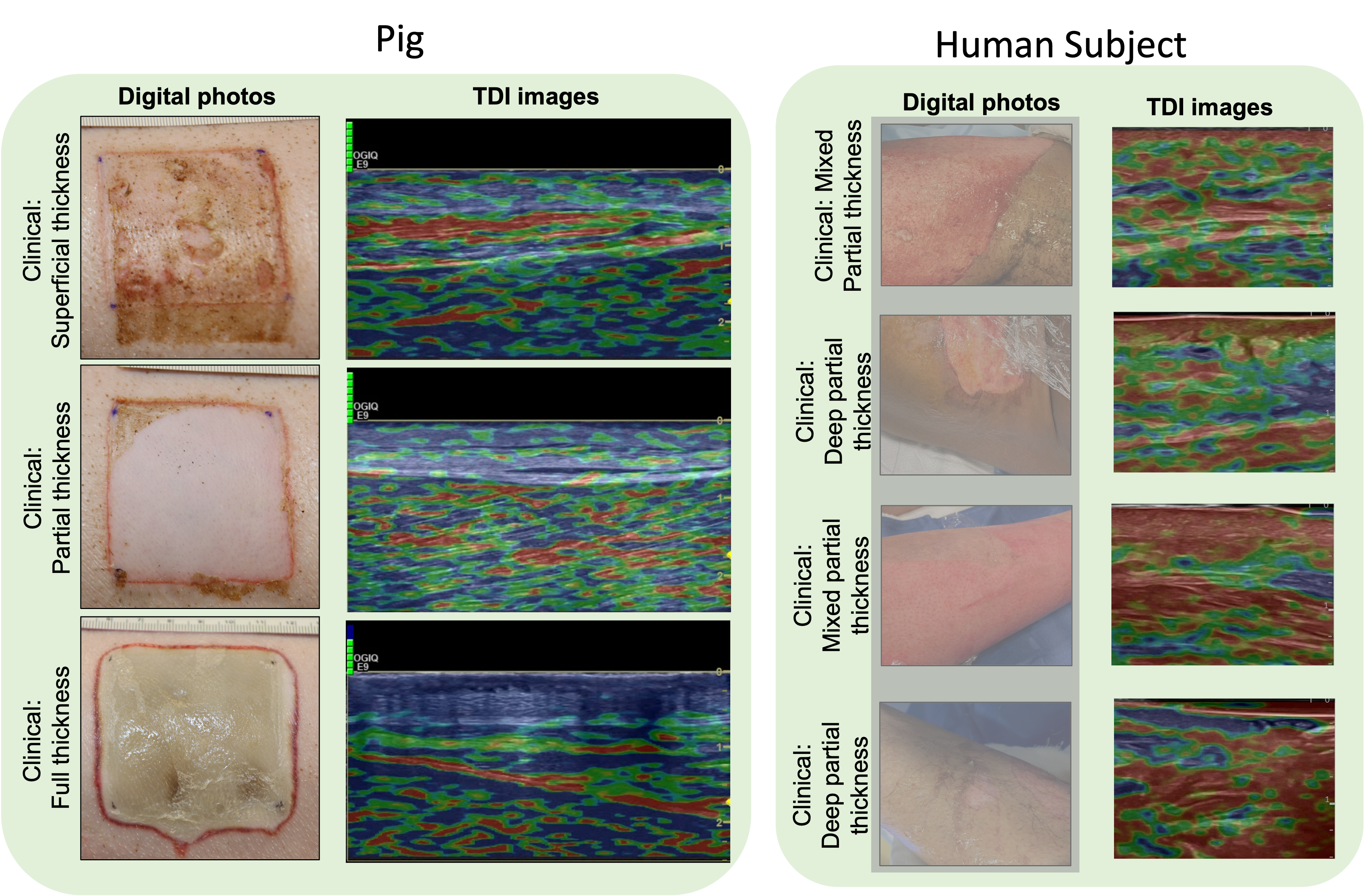

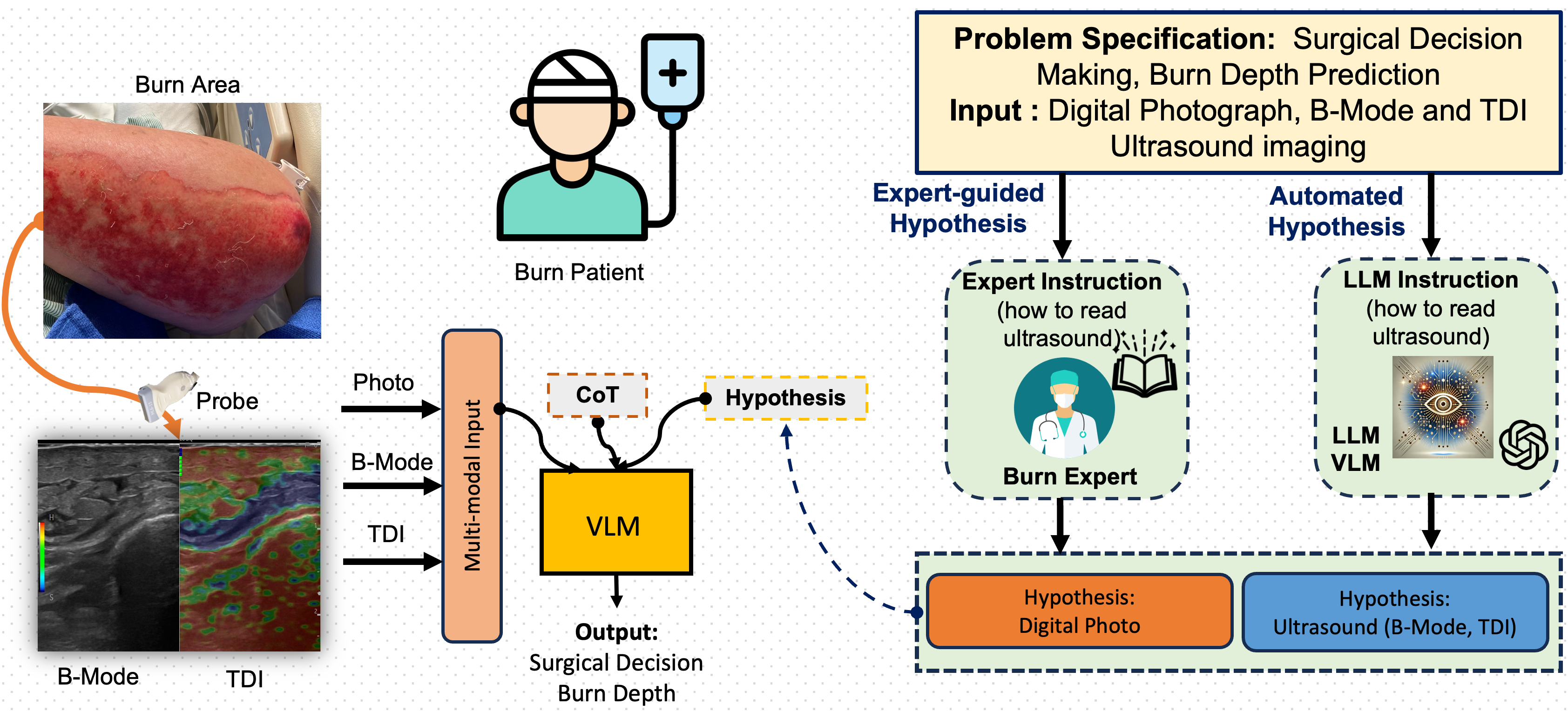

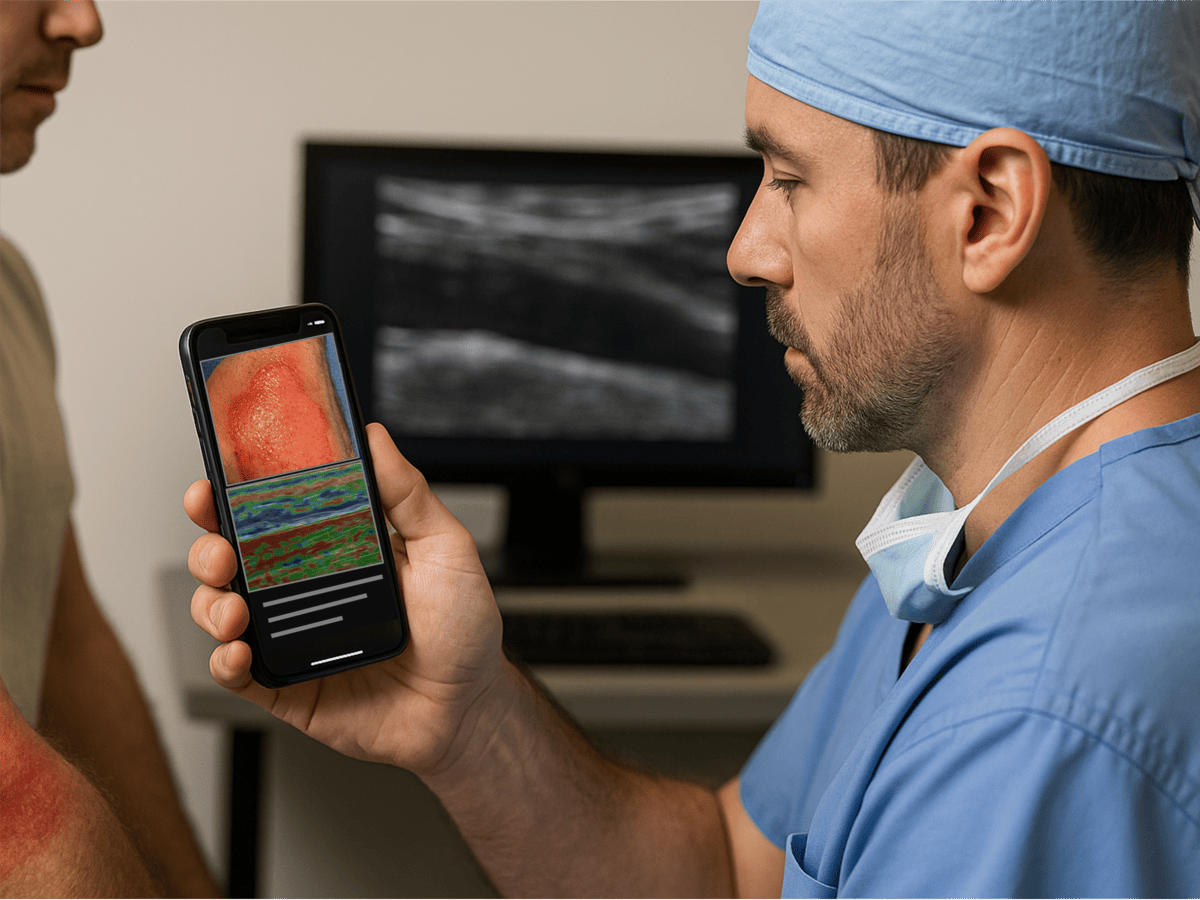

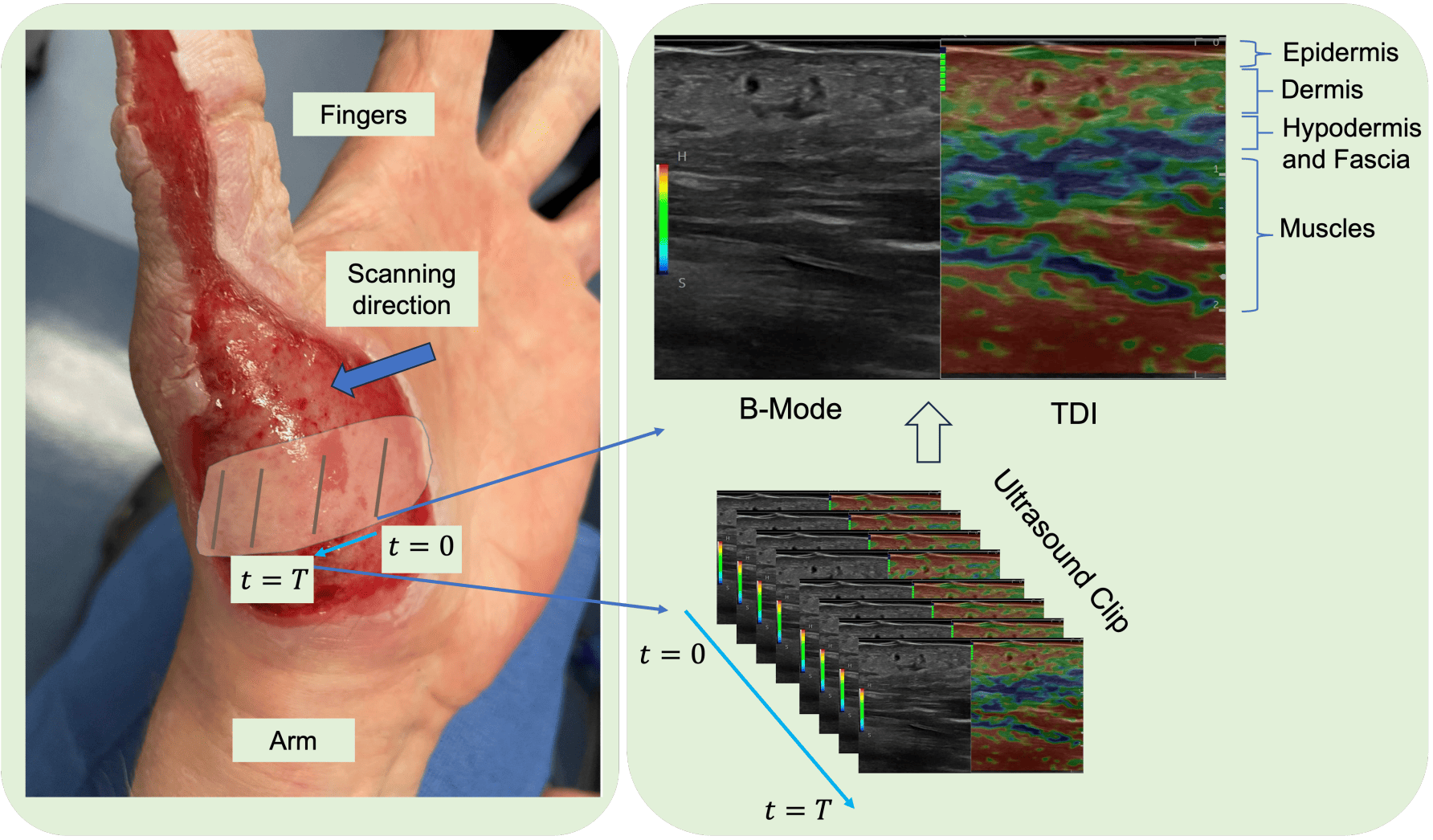

My work emphasizes real-world impact in AI for healthcare and autonomous robotics. For example, I developed a burn-diagnosis system that achieved 95% accuracy on real patient data, substantially outperforming traditional clinician-only decision processes. In robotics, I designed a teleoperation framework for remote surgical assistance that maintained stable control under network delays of up to five seconds—far exceeding the limits of conventional systems.

I earned my Ph.D. in Computer Science from Purdue University in 2024, where I was advised by Dr. Yexiang Xue, and am currently a Postdoctoral Researcher at Purdue working with Dr. Juan P. Wachs. Previously, I earned my M.S. in Computer Science from the University of Virginia and a B.Sc. in Computer Science and Engineering from BUET, and served as a Lecturer at BRAC University.

news

| Date | News |
|---|---|
| Dec 12, 2025 | ✨ Awarded NAIRR Compute Resources: allocated $25K in OpenAI credits and 50K high-capacity GPU hours through the National Artificial Intelligence Research Resource (NAIRR) Pilot. Role: Lead Member. |

| Dec 08, 2025 | 🏛️ Panelist: U.S. National Science Foundation Translation to Practice (NSF TTP) Program (January–February 2026) — An NSF program supporting the translation of research outcomes into practice. |

| Dec 06, 2025 | 📍 Attended NeurIPS 2025 in San Diego, California. |

| Nov 13, 2025 | 🎤 Gave an invited talk at the University of Texas at El Paso on AI for High-Stake Environments. The lecture was delivered for the course Artificial Intelligence for Scientific Discovery (Fall 2025), highlighting current challenges and opportunities for developing reliable AI in Healthcare. |

| Nov 10, 2025 | 🌟 Featured in the Purdue Postdoc Achievements series showcasing groundbreaking discoveries and professional accomplishments by Purdue’s postdoctoral scholars. |

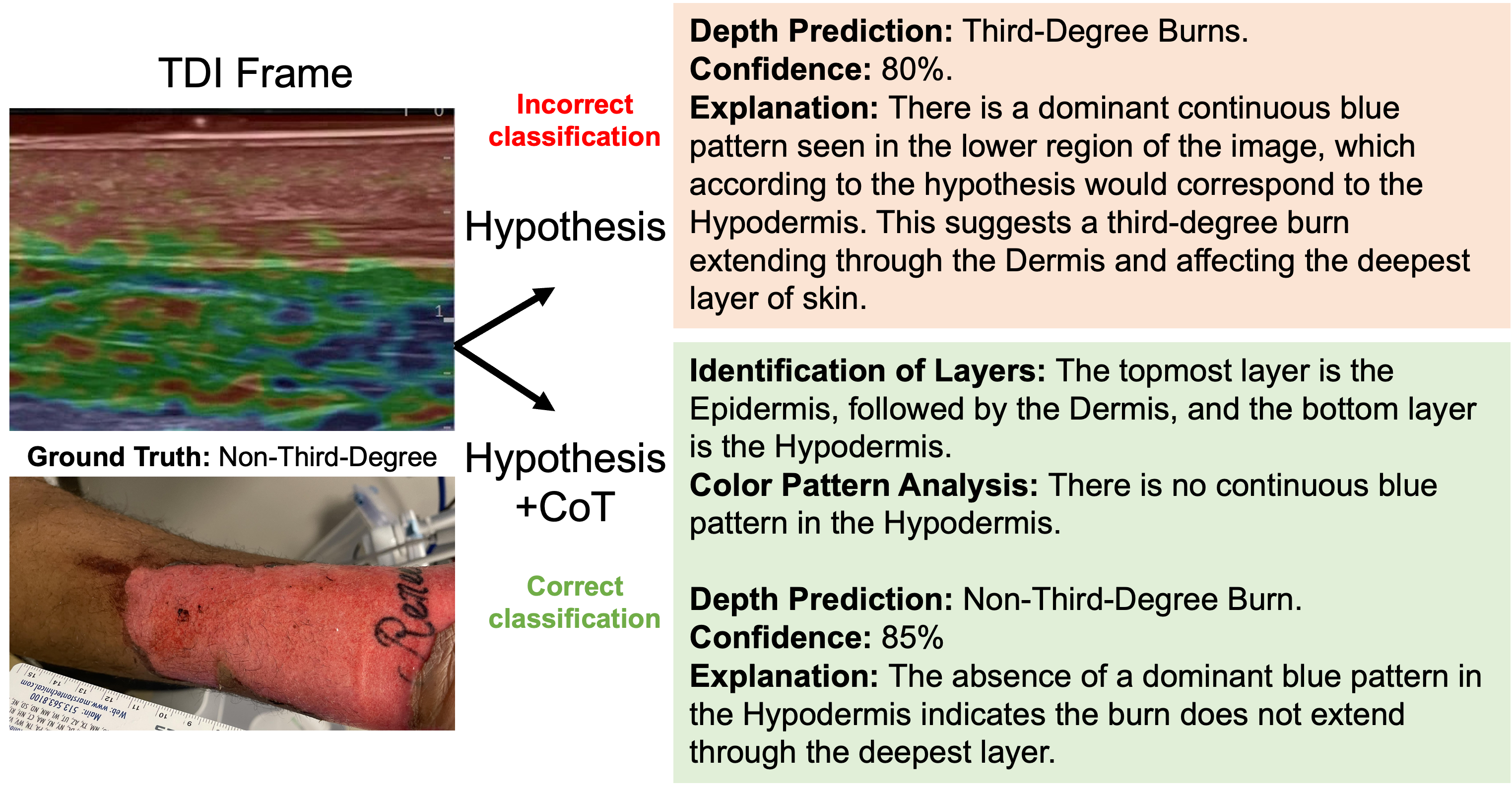

| Oct 13, 2025 | 📑 A paper titled “Automated Unified Reasoning with Vision-Language Models for Multi-modal Burn Assessment” has been accepted for oral presentation at Innovative Applications of Artificial Intelligence (IAAI-26) at AAAI 2026 (acceptance rate: 15%). |

| Oct 06, 2025 | 🏛️ Panelist: Reviewer Panel for the NAIRR Pilot (Oct–Dec 2025) — The National Artificial Intelligence Research Resource (NAIRR) is an NSF-led initiative, in collaboration with the DOE, NIH, and other agencies, supporting AI research and infrastructure development across the United States. |

| Sep 25, 2025 | 📑 Excited to present our latest research on reinforcement learning, vision-language reasoning, and AI for healthcare at the Workshops of the Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025), including the Aligning Reinforcement Learning Experimentalists and Theorists (ARLET), Multimodal Algorithmic Reasoning (MAR), GenAI for Health (GenAI4Health), and Embodied World Models for Decision Making (EWM) workshops. |

| Aug 18, 2025 | 📰 Featured in IE@Purdue News and Media — Best Paper Award on Burn Diagnosis, LinkedIn, X/Twitter |

| Aug 01, 2025 | ✨ Awarded NSF Robust Intelligence (RI) Grant — EAGER: Theoretical Foundations for Integrating Foundational Models into Reinforcement Learning (Award #2521982), $300,000, 2025–2027, PI: Juan P. Wachs, serving as Key Personnel. Excited to see support for this project I’ve been working on for quite a while. Grateful for the opportunity to take it further! |

| Jul 30, 2025 | 🔗 Affiliated with CARE — Founding member of the Center for AI and Robotic Excellence in Medicine (CARE) at Purdue University. |

| Jul 17, 2025 | 🏆 Awarded Best Paper Award (Full Paper Poster Category) at MIUA 2025 for the paper titled: Knowledge-Driven Hypothesis Generation for Burn Diagnosis from Ultrasound with Vision-Language Model. |

| Jul 15, 2025 | Attending MIUA 2025 at the University of Leeds, UK. |

| Jun 24, 2025 | 📑 A paper has been accepted to JMIR Medical Informatics (2025). Title: AI-Driven Integrated System for Burn Depth Prediction With Electronic Medical Records: Algorithm Development and Validation. |

| Jun 23, 2025 | ✨ Awarded a grant through the Health of the Forces Pilot Funding Program (Purdue University) for our project “Accelerated Expertise: AI-Powered Diagnostic Pathways for Rapid Clinical Mastery of Burns,” in collaboration with Dr. Juan Wachs (Industrial Engineering) and Dr. Aniket Bera (Computer Science). This project aims to enhance acute care and long-term outcomes for burn-injured service members using AI-powered diagnostic tools. Excited to continue working at the intersection of AI and burn care! |

| May 25, 2025 | 📑 An abstract has been accepted to Plastic Surgery The Meeting (PSTM) 2025, the premier annual conference organized by the American Society of Plastic Surgeons (ASPS). The work will be presented in New Orleans, Louisiana, in October 2025. |

| May 20, 2025 | 📑 An abstract has been accepted to the Military Health System Research Symposium (MHSRS) 2025. Title: A Chain-of-Thought AI Reasoning Framework for Burn Diagnosis. The work will be presented at MHSRS (the leading forum for military health research) at the Gaylord Palms Resort and Convention Center in Kissimmee, FL, in August 2025. |

| May 12, 2025 | 📑 A paper has been accepted to the Annual Conference on Medical Image Understanding and Analysis (MIUA) 2025. Paper title: Knowledge-Driven Hypothesis Generation for Burn Diagnosis from Ultrasound with a Vision-Language Model. Attending MIUA in July in Leeds, UK. |

| Mar 17, 2025 | 📑 A paper has been accepted to the Military Medicine journal (2025). Paper title: A Framework for Advancing Burn Assessment with Artificial Intelligence. |

| Nov 27, 2024 | 🏁 Completed NSF I-Corps Hub: Great Lakes Region. Digital Badge. |

| Nov 04, 2024 | 🚀 Started my Postdoc at Purdue University, Edwardson School of Industrial Engineering. The program is currently ranked 1st for online master’s, 2nd for undergraduate, and 3rd for graduate Industrial Engineering by U.S. News & World Report (2025). |

| Sep 24, 2024 | 🎓 Defended my Ph.D. Thesis. |

| Jul 31, 2024 | 🪧 Presenting a paper on AI for burn care at the Military Health System Research Symposium (MHSRS) 2024 in August in Kissimmee, FL. |

| Jul 31, 2024 | 🎤 Lightning talk on AI in Burn Surgery at ADSA 2024 in October at the University of Michigan, Ann Arbor. |

| Jul 31, 2024 | 🎤 Tutorial session on RL Benchmarking at ADSA 2024 in October at the University of Michigan, Ann Arbor. |

| Jul 17, 2024 | 📋 🎤 NAACL 2024: Organizer and Chair of the Birds of a Feather (BoF) session on Vision-Language Models in Medical Surgery. |

| May 21, 2024 | 🎤 Lightning talk on Vision-Language Model in Deep RL at MMLS 2024. |

selected publications

- Annals of Surgery

Automated Non-Invasive Burn Diagnostic System for Healthcare using Artificial Intelligence: AMBUSH-AIAnnals of Surgery, under revision, 2025Impact Factor = 6.4, *Equal Contribution

Automated Non-Invasive Burn Diagnostic System for Healthcare using Artificial Intelligence: AMBUSH-AIAnnals of Surgery, under revision, 2025Impact Factor = 6.4, *Equal Contribution - NeurIPS-25 Workshop

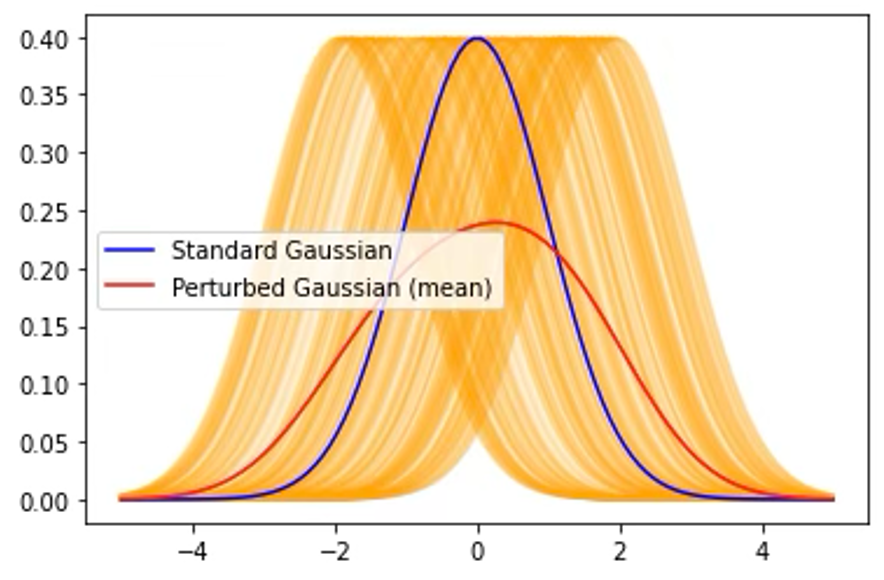

Robust Policy Gradient Optimization through Action Parameter Perturbation in Reinforcement LearningIn Proceedings of the Aligning Reinforcement Learning Experimentalists and Theorists Workshop (ARLET@NeurIPS 2025) at the Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025) , 2025

Robust Policy Gradient Optimization through Action Parameter Perturbation in Reinforcement LearningIn Proceedings of the Aligning Reinforcement Learning Experimentalists and Theorists Workshop (ARLET@NeurIPS 2025) at the Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025) , 2025 - NeurIPS-25 Workshop

Intent-Based Reward Inference for Value-Aligned Reinforcement LearningIn Proceedings of the Aligning Reinforcement Learning Experimentalists and Theorists Workshop (ARLET@NeurIPS 2025) at the Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025) , 2025

Intent-Based Reward Inference for Value-Aligned Reinforcement LearningIn Proceedings of the Aligning Reinforcement Learning Experimentalists and Theorists Workshop (ARLET@NeurIPS 2025) at the Thirty-Ninth Annual Conference on Neural Information Processing Systems (NeurIPS 2025) , 2025 - NeurIPS-25 Workshop

Improvisational Reasoning with Vision-Language Models for Grounded Procedural PlanningIn Proceedings of the Thirty-Ninth Annual Conference on Neural Information Processing Systems Workshops (NeurIPS 2025): Multimodal Algorithmic Reasoning Workshop (MAR@NeurIPS 2025), GenAI for Health: Potential, Trust, and Policy Compliance (GenAI4Health@NeurIPS 2025), Embodied World Models for Decision Making (EWM@NeurIPS 2025) , 2025

Improvisational Reasoning with Vision-Language Models for Grounded Procedural PlanningIn Proceedings of the Thirty-Ninth Annual Conference on Neural Information Processing Systems Workshops (NeurIPS 2025): Multimodal Algorithmic Reasoning Workshop (MAR@NeurIPS 2025), GenAI for Health: Potential, Trust, and Policy Compliance (GenAI4Health@NeurIPS 2025), Embodied World Models for Decision Making (EWM@NeurIPS 2025) , 2025 - NeurIPS-25 Workshop

Vision-Language Reasoning for Burn Depth Assessment with Structured Diagnostic HypothesesIn Proceedings of the Thirty-Ninth Annual Conference on Neural Information Processing Systems Workshops (NeurIPS 2025): GenAI for Health: Potential, Trust, and Policy Compliance (GenAI4Health@NeurIPS 2025), Embodied World Models for Decision Making (EWM@NeurIPS 2025) , 2025

Vision-Language Reasoning for Burn Depth Assessment with Structured Diagnostic HypothesesIn Proceedings of the Thirty-Ninth Annual Conference on Neural Information Processing Systems Workshops (NeurIPS 2025): GenAI for Health: Potential, Trust, and Policy Compliance (GenAI4Health@NeurIPS 2025), Embodied World Models for Decision Making (EWM@NeurIPS 2025) , 2025 - JMIR

AI-Driven Integrated System for Burn Depth Prediction With Electronic Medical Records: Algorithm Development and ValidationJMIR Medical Informatics, 2025

AI-Driven Integrated System for Burn Depth Prediction With Electronic Medical Records: Algorithm Development and ValidationJMIR Medical Informatics, 2025 - MIUA

Knowledge-Driven Hypothesis Generation for Burn Diagnosis from Ultrasound with Vision- Language ModelIn Annual Conference on Medical Image Understanding and Analysis , 2025

Knowledge-Driven Hypothesis Generation for Burn Diagnosis from Ultrasound with Vision- Language ModelIn Annual Conference on Medical Image Understanding and Analysis , 2025 - MHSRS-Abstract

A Chain-of-Thought AI Reasoning Framework for Burn DiagnosisIn Military Health System Research Symposium , 2025

A Chain-of-Thought AI Reasoning Framework for Burn DiagnosisIn Military Health System Research Symposium , 2025